Most Recent Snowflake ADA-C01 Exam Questions & Answers

Prepare for the Snowflake SnowPro Advanced: Administrator Certification exam with our extensive collection of questions and answers. These practice Q&A are updated according to the latest syllabus, providing you with the tools needed to review and test your knowledge.

QA4Exam focuses on the latest syllabus and exam objectives, and our practice Q&A are designed to help you identify key topics and solidify your understanding. By concentrating on the core curriculum, these questions and answers help you cover all the essential topics so that you are well prepared for every section of the exam. Each question comes with a detailed explanation, offering valuable insights and helping you learn from your mistakes. Whether you're looking to assess your progress or dive deeper into complex topics, our updated Q&A will provide the support you need to approach the Snowflake ADA-C01 exam with confidence.

The questions for ADA-C01 were last updated on Jan 21, 2025.


The following commands were executed:

Grant usage on database PROD to role PROD_ANALYST;

Grant usage on database PROD to role PROD_SUPERVISOR;

Grant ALL PRIVILEGES on schema PROD.WORKING to role PROD_ANALYST;

Grant ALL PRIVILEGES on schema PROD.WORKING to role PROD_SUPERVISOR;

Grant role PROD_ANALYST to user A;

Grant role PROD_SUPERVISOR to user B;

What authority does each user have on the WORKING schema?
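One way to reason about this question is to inspect what the grants above actually produce. The following is a minimal sketch that uses only the object names from the question and assumes the grants have already been executed as written. Note that schema-level privileges do not, by themselves, grant access to tables owned by a different role.

```sql
-- List the privileges granted on the schema itself.
SHOW GRANTS ON SCHEMA PROD.WORKING;

-- List everything each role has been granted.
SHOW GRANTS TO ROLE PROD_ANALYST;
SHOW GRANTS TO ROLE PROD_SUPERVISOR;

-- List the roles granted to each user.
SHOW GRANTS TO USER A;
SHOW GRANTS TO USER B;
```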

A Snowflake Administrator has a multi-cluster virtual warehouse and is using the Snowflake Business Critical edition. The minimum number of clusters is set to 2 and the maximum number of clusters is set to 10. This configuration works well for the standard workload, rarely exceeding 5 running clusters. However, once a month the Administrator notes that there are a few complex long-running queries that are causing increased queue time and the warehouse reaches its maximum limit at 10 clusters.

Which solutions will address the issues happening once a month? (Select TWO).

According to the Snowflake documentation, a multi-cluster warehouse is a virtual warehouse made up of multiple compute clusters that Snowflake starts and stops automatically (scaling out and in) to handle the concurrency needs of the queries submitted to it. The administrator specifies the minimum and maximum number of clusters.

Option A addresses the monthly issue: one task increases the warehouse size for the period in which the complex queries run, and a second task reduces the size once they complete. Each cluster then has more compute available for the complex queries, so the warehouse is less likely to reach its limit of 10 clusters, and it returns to its normal size afterwards to save costs.

Option B also addresses the issue: the group running the complex monthly queries uses a separate, appropriately sized warehouse. This isolates the complex queries from the standard workload and avoids queue time and resource contention.

Option C is not recommended, as it would increase the cost and complexity of managing the multi-cluster warehouse and may not solve the underlying problem of inefficient queries. Option D is good practice for improving query performance, but it is not a direct solution: it requires analyzing and optimizing the complex queries with clustering keys or materialized views, which may not be feasible or effective in all cases. Option E is not recommended, as it would increase costs and waste resources by starting more clusters than the standard workload needs.
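The task-based approach described for Option A could look roughly like the sketch below. All object names (`reporting_wh`, `admin_wh`, the task names), the target sizes, and the cron schedules are assumptions for illustration; the question does not specify them. The role that owns the tasks would need the privilege to modify the warehouse.

```sql
-- Hypothetical: resize the warehouse at the start of the monthly run.
CREATE OR REPLACE TASK scale_up_reporting_wh
  WAREHOUSE = admin_wh                      -- assumed warehouse that runs the task itself
  SCHEDULE = 'USING CRON 0 0 1 * * UTC'     -- assumed: midnight UTC on the 1st of each month
AS
  ALTER WAREHOUSE reporting_wh SET WAREHOUSE_SIZE = 'XLARGE';

-- Hypothetical: return to the normal size once the complex queries are expected to be done.
CREATE OR REPLACE TASK scale_down_reporting_wh
  WAREHOUSE = admin_wh
  SCHEDULE = 'USING CRON 0 0 2 * * UTC'     -- assumed: one day later
AS
  ALTER WAREHOUSE reporting_wh SET WAREHOUSE_SIZE = 'MEDIUM';

-- Tasks are created suspended and must be resumed before they run.
ALTER TASK scale_up_reporting_wh RESUME;
ALTER TASK scale_down_reporting_wh RESUME;
```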

Which statement allows this user to access this Snowflake account from a specific IP address (192.168.1.100) while blocking their access from anywhere else?
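As a general illustration of how access is restricted to a single IP address for one user, a network policy can be created and attached at the user level. This is a sketch only: the policy name `single_ip_policy` and the user name `target_user` are placeholders, since the question's user and answer options are not shown here. When an allowed list is set, addresses outside it are blocked.

```sql
-- Hypothetical policy name; only the listed address is allowed.
CREATE NETWORK POLICY single_ip_policy
  ALLOWED_IP_LIST = ('192.168.1.100');

-- Hypothetical user name; a user-level policy applies to that user only.
ALTER USER target_user SET NETWORK_POLICY = single_ip_policy;
```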

An Administrator loads data into a staging table every day. Once loaded, users from several different departments perform transformations on the data and load it into different production tables.

How should the staging table be created and used to MINIMIZE storage costs and MAXIMIZE performance?

According to the Snowflake documentation, a transient table has no Fail-safe period and supports a Time Travel retention of at most 1 day, so it avoids the storage cost of the Fail-safe copies kept for disaster recovery. Setting its retention time to 0 days also disables Time Travel, so no historical versions of the data are kept after the table is changed, truncated, or dropped, and that data is not recoverable. Creating the staging table as a transient table with a retention time of 0 days therefore minimizes storage costs while keeping performance high: the data is loaded once, transformed, and no longer needed after the production tables are populated.

Option A is incorrect because an external table, which references data files stored in a cloud storage location, can add cost and complexity for data transfer and synchronization, and may not provide the best performance for loading and transformation. Option C is incorrect because a temporary table is visible only within the session that created it, so users from other departments could not read it, and it is dropped automatically when that session ends, which risks data loss if the session terminates before the production tables are populated. Option D is incorrect because a permanent table supports both Time Travel and Fail-safe, which incurs additional storage costs for historical versions and disaster-recovery copies.
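A transient staging table with no Time Travel retention can be declared as in the sketch below. The table name and columns are hypothetical and chosen only for illustration; the relevant parts are the `TRANSIENT` keyword and the `DATA_RETENTION_TIME_IN_DAYS` parameter.

```sql
-- Hypothetical table and column names, for illustration only.
CREATE TRANSIENT TABLE staging_daily_load (
  id        NUMBER,
  payload   VARIANT,
  loaded_at TIMESTAMP_NTZ
)
DATA_RETENTION_TIME_IN_DAYS = 0;  -- no Time Travel; transient tables never have Fail-safe
```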

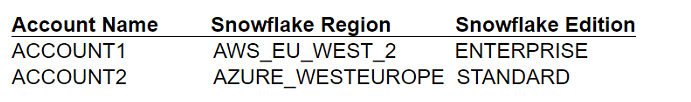

A Snowflake organization MYORG consists of two Snowflake accounts:

ACCOUNT1 has a database PROD_DB and has the ORGADMIN role enabled.

Management wants to have the PROD_DB database replicated to ACCOUNT2.

Are there any necessary configuration steps in ACCOUNT1 before the database replication can be configured and initiated in ACCOUNT2?

According to the Snowflake documentation, database replication across accounts within the same organization requires the following steps:

* Link the accounts in the organization using the ORGADMIN role.

* Enable account database replication for both the source and target accounts using the SYSTEM$GLOBAL_ACCOUNT_SET_PARAMETER function.

* Promote a local database to serve as the primary database and enable replication to the target accounts using the ALTER DATABASE ... ENABLE REPLICATION TO ACCOUNTS command.

* Create a secondary database in the target account using the CREATE DATABASE ... AS REPLICA OF command.

* Refresh the secondary database periodically using the ALTER DATABASE ... REFRESH command.

Option A is incorrect because it does not include the step of creating a secondary database in the target account. Option C is incorrect because replicating databases across accounts within the same organization is not enabled by default; account database replication must first be enabled for both the source and target accounts. Option D is incorrect because it is possible to replicate a database from an Enterprise edition Snowflake account to a Standard edition Snowflake account, as long as the IGNORE EDITION CHECK option is used in the ALTER DATABASE ... ENABLE REPLICATION TO ACCOUNTS command. Option B is correct because it includes all the necessary configuration steps in ACCOUNT1; the remaining step, creating the secondary database in ACCOUNT2, is done after replication is enabled.
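The replication steps listed above can be sketched as follows, using the organization, account, and database names from the question. The role choices and the name of the secondary database are assumptions, and the statements are split between the two accounts as noted in the comments.

```sql
-- In ACCOUNT1, using the ORGADMIN role: enable replication for both accounts.
USE ROLE ORGADMIN;
SELECT SYSTEM$GLOBAL_ACCOUNT_SET_PARAMETER(
  'MYORG.ACCOUNT1', 'ENABLE_ACCOUNT_DATABASE_REPLICATION', 'true');
SELECT SYSTEM$GLOBAL_ACCOUNT_SET_PARAMETER(
  'MYORG.ACCOUNT2', 'ENABLE_ACCOUNT_DATABASE_REPLICATION', 'true');

-- In ACCOUNT1 (assumed role: ACCOUNTADMIN): promote PROD_DB to a primary database.
USE ROLE ACCOUNTADMIN;
ALTER DATABASE PROD_DB ENABLE REPLICATION TO ACCOUNTS MYORG.ACCOUNT2;

-- In ACCOUNT2 (assumed role: ACCOUNTADMIN): create and refresh the secondary database.
CREATE DATABASE PROD_DB AS REPLICA OF MYORG.ACCOUNT1.PROD_DB;
ALTER DATABASE PROD_DB REFRESH;
```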
